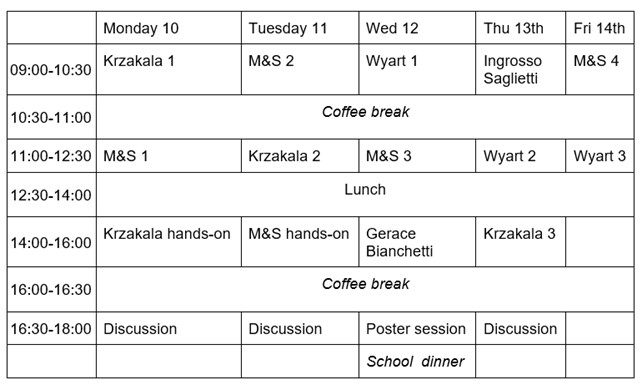

Program

Abstracts

Main Lecturers

- Francesca Mastrogiuseppe & Friedrich Schüssler

Recurrent neural networks as a model for dynamics and learning in neuroscience.

Dynamics and learning in brain networks are poorly understood. Artificial neural networks, both feed-forward and recurrent, serve as models that help to link experimental observations and theoretical ideas. Here we focus on recurrent neural networks (RNNs) as a model for neural dynamics, and explore several versions of such networks. First, we consider randomly connected RNNs as a canonical model for exploring different dynamical states, phase transitions, and related phenomena. Second, we design low-rank networks as an interpretable framework for linking connectivity and computation. Finally, we analyze RNNs trained by gradient descent, showing how low-rank structures arise during training. We will also see how requiring stable dynamics leads to training regimes different from those observed in feed-forward networks. Overall, the course will serve as a point of departure for using RNNs as models of dynamics and learning in the brain.

- Matthieu Wyart

What in the structure of data makes them learnable?

Abstract: Learning generic tasks in high dimension is impossible, as it would require an unattainable amount of training data. Yet algorithms and humans can play the game of Go, decide what is in an image, or learn languages. The only resolution of this paradox is that learnable data are highly structured. I will review ideas in the field about what this structure may be. I will then discuss how the hierarchical and combinatorial aspects of data can dramatically affect supervised learning, score-based diffusion generative models, and self-supervised learning.

- Florent Krzakala

A taste of Machine Learning theory: Lessons from simple models

Topic Speakers

Topic Seminars I:

- Gerace

TBA

- Marco Bianchetti

Learning Market Data Anomalies

Every day, market risk managers in the financial industry handle a huge amount of market data, market risk scenarios, and portfolios of financial instruments required to compute market risk measures, e.g. value at risk, for both managerial and regulatory purposes. Occasionally, some data may present anomalous values for a number of reasons, e.g. bugs in the related production and storage processes, or sudden and severe market movements. Hence, it is important to integrate the daily data quality process with automatic and statistically robust tools able to analyse all the available information and identify possible anomalies, since these “needles in the haystack” may, in some cases, affect the tail risk captured by market risk measures.

To this end, we tested different machine learning algorithms, namely Isolation Forest, Autoencoders, and Long Short-Term Memory (LSTM) networks, to build a market anomaly detection framework general enough to cover different asset classes and data dimensionalities (curves, surfaces, and cubes). Preliminary results on EUR interest rate curves suggest that LSTM performs better, as it may capture the historical dynamics of the yield curve and allows a better reconstruction quality. On the other hand, the results strongly depend on assumptions and model parameters, so human supervision remains crucial for taking appropriate remediation actions.

Topic Seminars II:

- Alessandro Ingrosso

A computation-dissipation tradeoff for machine learning at the mesoscale

The cost of information processing in physical systems calls for a trade-off between performance and energetic expenditure. We formulate a computation-dissipation bottleneck in mesoscopic systems used as input-output devices. Using both real datasets and synthetic tasks, we show how non-equilibrium leads to enhanced performance. Our framework sheds light on a crucial compromise between information compression, input-output computation and dynamic irreversibility induced by non-reciprocal interactions.

- Luca Saglietti

Inference of language models on a tree.